Dependency Management in Infrastructure as Code - Part Two

Intro

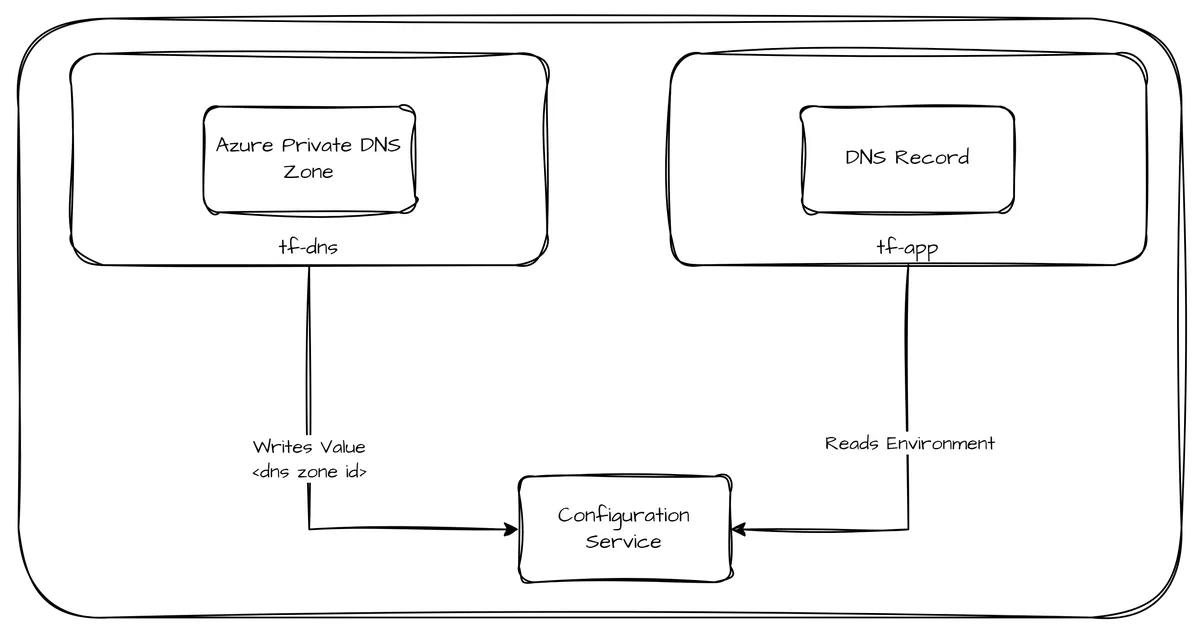

This is the second part in a series of two posts outlining alternatives for managing dependencies between different Infrastructure as Code (IaC) configurations. In this part, we'll cover managing dependencies between two Terraform configurations using Pulumi ESC as a central configuration service.

Scenario

In most hub-and-spoke architectures, responsibilities for managing DNS zones and records are separated between platform and application teams. Therefore, we create two Terraform configurations: one deploys a Private DNS Zone on Azure, and the other registers a record within that zone.

After deployment, the required information about the Private DNS Zone (its Azure Resource Manager ID) is written to a central ESC environment, from which the application configuration reads it.

Components

Repository

All configuration and script files are stored in the companion repository available on GitHub.

Terraform/Terragrunt Configurations

TF-DNS

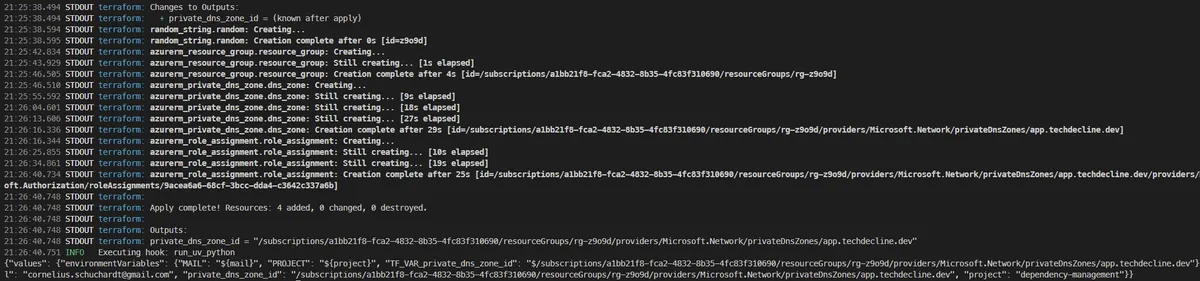

The DNS Zone will be deployed from a Terraform configuration that is wrapped within a Terragrunt Project.

The Terragrunt Project contains an after_hook that triggers the Python solution stored in the esc-mod directory, which is explained further down. In short, the hook reads the Terraform output and stores the result in a pre-defined Pulumi ESC environment for later retrieval.
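To make the hook's first step more concrete, here is a minimal Python sketch of reading Terraform outputs. It is not the code from the companion repository. It assumes the hook receives the JSON document produced by terraform output -json, where every output is an object with a value field. The function name flatten_outputs, the output name private_dns_zone_id and the placeholder resource ID are hypothetical.

```python
import json


def flatten_outputs(terraform_output_json: str) -> dict[str, str]:
    """Map each Terraform output name to a plain string value.

    Expects the JSON printed by `terraform output -json`, where every
    output looks like {"sensitive": ..., "type": ..., "value": ...}.
    """
    raw = json.loads(terraform_output_json)
    flat = {}
    for name, meta in raw.items():
        value = meta["value"]
        # Strings are kept as-is; lists, maps, numbers and booleans are
        # JSON-encoded so that every stored value is a string.
        flat[name] = value if isinstance(value, str) else json.dumps(value)
    return flat


# Hypothetical sample; the resource ID is a placeholder, not a real zone.
sample = json.dumps(
    {
        "private_dns_zone_id": {
            "sensitive": False,
            "type": "string",
            "value": "/subscriptions/<sub-id>/resourceGroups/<rg>"
            "/providers/Microsoft.Network/privateDnsZones/example.internal",
        }
    }
)

outputs = flatten_outputs(sample)
```

In a real hook, the JSON string would come from running terraform output -json in the configuration directory instead of the inline sample.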

TF-APP

The second configuration is called tf-app to mimic a real application configuration (remember, the scenario's emphasis lies on the separation of concerns between platform and application teams).

All it contains is a variable asking for the DNS Zone's resource ID and a resource definition that deploys a DNS record within the zone.

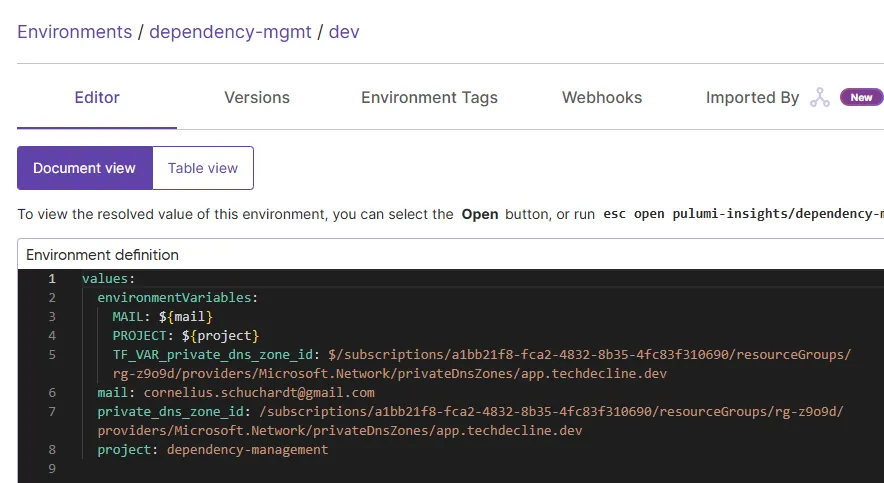

The variable will then be populated from the ESC environment.

ESC-MOD

The esc-mod directory contains a Python project that handles the modification of a given ESC environment. It can create and update values within an environment and provides switches to write them as environment variables with an optional prefix (TF_VAR_ in our example).
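The prefix handling can be sketched in a few lines of Python. This is a rough illustration and not the actual esc-mod implementation, which may use a different mechanism to talk to Pulumi ESC. It assumes values are written with the esc env set command and that exported variables live under the environmentVariables key of the environment. The function name esc_set_commands and the environment name my-project/dev are hypothetical.

```python
def esc_set_commands(
    environment: str,
    values: dict[str, str],
    prefix: str = "",
    as_env_vars: bool = True,
) -> list[list[str]]:
    """Build one `esc env set` command per value without running it."""
    commands = []
    for name, value in sorted(values.items()):
        key = f"{prefix}{name}"
        # Assumption: values under `environmentVariables` are exported
        # into the process environment by `esc run`.
        path = f"environmentVariables.{key}" if as_env_vars else key
        commands.append(["esc", "env", "set", environment, path, value])
    return commands


# Hypothetical environment name and value, for illustration only.
commands = esc_set_commands(
    "my-project/dev",
    {"private_dns_zone_id": "<zone-resource-id>"},
    prefix="TF_VAR_",
)
```

Each inner list can be handed to subprocess.run. With the TF_VAR_ prefix, Terraform picks up the exported variable as the input variable private_dns_zone_id without any extra wiring.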

Process

Prerequisites

For easier consumption, we're going to make the following assumptions:

- You are logged in using Azure CLI

- You are logged in using Pulumi ESC CLI

- PULUMI_ENVIRONMENT and PULUMI_PROJECT are set within the current shell

- az, uv, esc, terraform and terragrunt are available within the environment
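The tool and variable assumptions above can be checked up front with a small script. This is an optional sketch, not part of the companion repository, and the function name missing_prerequisites is hypothetical. It only checks that the tools are on the PATH and that the variables are non-empty; it does not verify the Azure or Pulumi logins.

```python
import os
import shutil

REQUIRED_TOOLS = ("az", "uv", "esc", "terraform", "terragrunt")
REQUIRED_VARS = ("PULUMI_ENVIRONMENT", "PULUMI_PROJECT")


def missing_prerequisites(env=None, which=shutil.which):
    """Return (missing_tools, missing_vars) for the current shell.

    `env` and `which` are injectable so the check can be exercised
    without touching the real environment.
    """
    env = os.environ if env is None else env
    missing_tools = [tool for tool in REQUIRED_TOOLS if which(tool) is None]
    missing_vars = [var for var in REQUIRED_VARS if not env.get(var)]
    return missing_tools, missing_vars


if __name__ == "__main__":
    tools, variables = missing_prerequisites()
    if tools:
        print("Missing tools:", ", ".join(tools))
    if variables:
        print("Unset variables:", ", ".join(variables))
```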

Apply TF-DNS

First, we apply the configuration using Terragrunt from the CLI: terragrunt apply. This deploys the DNS Zone and also sets or updates the configuration value within the ESC environment.

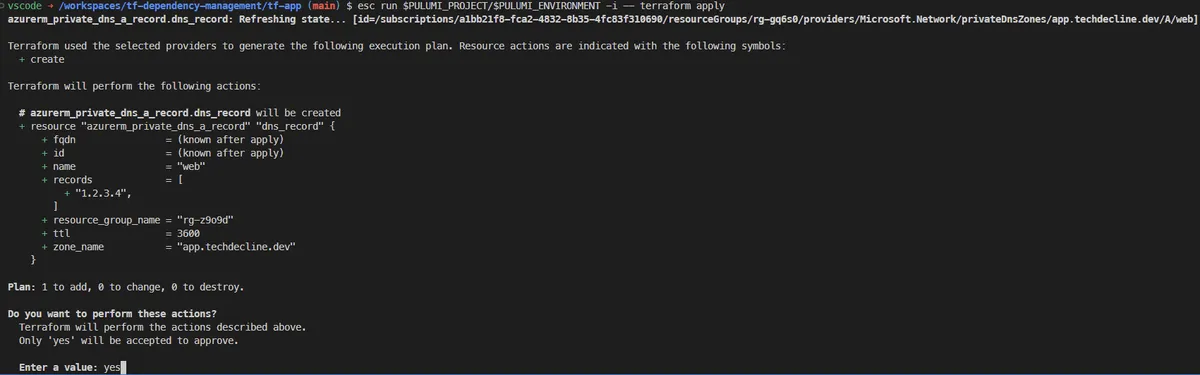

Apply TF-APP

Let's switch to the tf-app directory and invoke the second configuration. This time, we'll stick to the Terraform integration guide from Pulumi and invoke the configuration with the following command: esc run $PULUMI_PROJECT/$PULUMI_ENVIRONMENT -i -- terraform apply.

If everything is set up correctly, Terraform does not prompt for the required variable, because the value is fetched from the ESC environment.

Summary

Using a central configuration service like Pulumi ESC makes it possible to decouple configuration variables from the configuration logic. As shown in this post, it also models dependencies between disconnected configurations considerably better than the other solutions laid out in part one.

To fulfill the complete vision, the following topics would need some attention going forward (maybe room for another post or two in this series):

- Scheduling and orchestration: After the environment is updated, a CI/CD system could receive webhooks or events and invoke a pipeline that (re-)converges the dependent configuration.

- Interface and contract: Both configurations still need to agree on the variable's name, so there is still room for improvement with regard to coupling. One solution would be to develop a schema for configuration information that is shared by every configuration.

- Maturity: The Python script is merely a proof of concept and could be rewritten carefully to improve state handling, logging and overall code quality. Alternatively, one could argue that writing a provider for Terraform (or any other IaC platform) is probably a better fit if one wants to apply this approach at scale.
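As a rough illustration of the interface and contract point, a shared schema could start as a small module that both the writing and the reading side import. This is a hypothetical sketch and not part of the companion repository: the CONTRACT mapping, the key private_dns_zone_id and the function contract_violations are assumptions made for the example.

```python
# Hypothetical shared contract: key name -> prefix every valid value starts with.
CONTRACT = {
    "private_dns_zone_id": "/subscriptions/",
}


def contract_violations(values: dict[str, str]) -> list[str]:
    """List every way `values` deviates from the shared contract."""
    problems = []
    for key, required_prefix in CONTRACT.items():
        if key not in values:
            problems.append(f"missing key: {key}")
        elif not values[key].startswith(required_prefix):
            problems.append(f"unexpected format for {key}")
    return problems
```

The writing side could run this check before updating the environment, and the reading side before invoking Terraform, so that a renamed or malformed value fails early instead of surfacing as a prompt or a failed apply.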