Container-Use in Dagger Projects

Context

By definition, coding agents (and AI agents in general) combine a large language model, a well-defined set of tools exposed via the Model Context Protocol, and a set of user- and system-defined prompts.

Modern AI coding agents typically bundle LLMs, tools, and integrations into vertically integrated platforms, creating vendor lock-in risks for developers and extended lead time when evaluating tools outside the chosen ecosystem.

By decomposing the components of agents using vendor-agnostic tools like Docker Desktop, Container-Use and other container-based technologies, developers can break free from the chains of Big Tech.

Problem

When stacking multiple tools that use containers, you will eventually run into situations that require Docker-in-Docker, which is usually hard to set up and troubleshoot, resource-intensive, and undesirable from a security standpoint.

In my case, I wanted to run Dagger commands within Container-Use Environments. Without updating the environment accordingly, this would have required spawning a Dagger Engine within the Container-Use environment.

Solution Overview

To enable integrating Dagger projects with Container-Use, I exposed the Dagger engine running on my workstation to the host system by enabling TCP port forwarding.

Additionally, I configured my development environment to point Dagger towards the exposed engine using the environment variable _EXPERIMENTAL_DAGGER_RUNNER_HOST.

As a result, my coding agent was able to run Dagger commands within container environments.
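A minimal sketch of that wiring in Python, assuming the engine listens on port 1234 and is reachable as `host.docker.internal` (both taken from the setup in this post). The helper names `dagger_env` and `run_dagger` are hypothetical and only serve as illustration; they are not part of Dagger or Container-Use.

```python
import os
import subprocess


def dagger_env(host: str = "host.docker.internal", port: int = 1234) -> dict[str, str]:
    """Return a copy of the current environment that points the Dagger CLI
    at an engine exposed over TCP (hypothetical helper)."""
    env = dict(os.environ)
    env["_EXPERIMENTAL_DAGGER_RUNNER_HOST"] = f"tcp://{host}:{port}"
    return env


def run_dagger(*args: str) -> subprocess.CompletedProcess:
    """Run a Dagger CLI command against the exposed engine.

    Assumes the `dagger` binary is on the PATH.
    """
    return subprocess.run(["dagger", *args], env=dagger_env(), check=True)
```

Calling `run_dagger("functions")` inside a Dagger module directory would then talk to the exposed engine instead of trying to start a new one.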

Setup

Setup TCP Dagger Engine

After installing Dagger, you need to set up the engine to run and expose itself over a TCP port. I achieved this by creating a docker-compose.yaml file that I run using `docker-compose -f docker-compose.yaml up` (the `-f` option has to come before the `up` subcommand):

    version: '3.8'

    services:
      dagger-engine:
        image: registry.dagger.io/engine:v0.18.14 # latest version at time of writing
        container_name: dagger-engine
        privileged: true
        ports:
          - "1234:1234"
        volumes:
          - /var/lib/dagger:/var/lib/dagger
        command: --addr tcp://0.0.0.0:1234
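Before wiring anything else to the engine, it can help to confirm that the published port actually accepts connections. The following is a rough sketch, assuming the engine is published on `localhost:1234` as in the compose file above; `engine_reachable` is a hypothetical helper name. Note that it only proves that something is listening on the port, not that the listener is a Dagger engine.

```python
import socket


def engine_reachable(host: str = "localhost", port: int = 1234, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        # create_connection resolves the host and performs the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # covers refused connections, timeouts, and name resolution failures
        return False
```

From inside a Container-Use environment, the same check would use `host.docker.internal` as the host.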

Install and integrate container-use

For more information on how to run Container-Use, see my previous post.

Create new Python Dagger Project

Lately, I added mise-en-place to my tool belt to manage development tools and environments. For this post, I followed these steps within my WSL instance terminal for bootstrapping:

- Create a `mise.toml` within an empty directory with the following content:

      [tools]
      dagger = '0.18.14' # latest version at time of writing
      uv = '0.8.11' # latest version at time of writing

- Create a `.container-use/environment.json` within the directory:

      {
        "workdir": "/workspace",
        "base_image": "ubuntu:24.04",
        "setup_commands": [
          "apt-get update",
          "apt-get install -y curl ca-certificates",
          "curl https://mise.run/bash | MISE_INSTALL_PATH=/usr/local/bin/mise sh"
        ],
        "install_commands": [
          "mise trust",
          "mise install",
          "mise exec -- dagger develop"
        ],
        "env": [
          "MISE_INSTALL_PATH=/usr/local/bin",
          "_EXPERIMENTAL_DAGGER_RUNNER_HOST=tcp://host.docker.internal:1234"
        ]
      }

- Run `mise trust && mise install` locally to install dependencies
- Run Dagger bootstrapping:

      mise exec -- dagger init --sdk=python
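The one setting in `.container-use/environment.json` that makes this setup work is the `_EXPERIMENTAL_DAGGER_RUNNER_HOST` entry. As a small sanity check, the sketch below reads that value back out of the file. It assumes the `env` field is a list of `KEY=VALUE` strings, as in the file shown above; the function names are hypothetical.

```python
import json
from pathlib import Path
from typing import Optional

RUNNER_VAR = "_EXPERIMENTAL_DAGGER_RUNNER_HOST"


def runner_host(config_text: str) -> Optional[str]:
    """Return the Dagger runner host from an environment.json document,
    or None if the variable is not set."""
    config = json.loads(config_text)
    for entry in config.get("env", []):
        # split only on the first "=", since the value may contain "=" itself
        key, _, value = entry.partition("=")
        if key == RUNNER_VAR:
            return value
    return None


def check_config(path: str = ".container-use/environment.json") -> str:
    """Raise if the file does not point Dagger at a TCP engine."""
    host = runner_host(Path(path).read_text())
    if host is None or not host.startswith("tcp://"):
        raise ValueError(f"{RUNNER_VAR} is missing or not a tcp:// address")
    return host
```

Running `check_config()` from the project directory returns the configured address or fails early, before the agent spends time building the environment.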

Demonstration Dagger Engine Integration

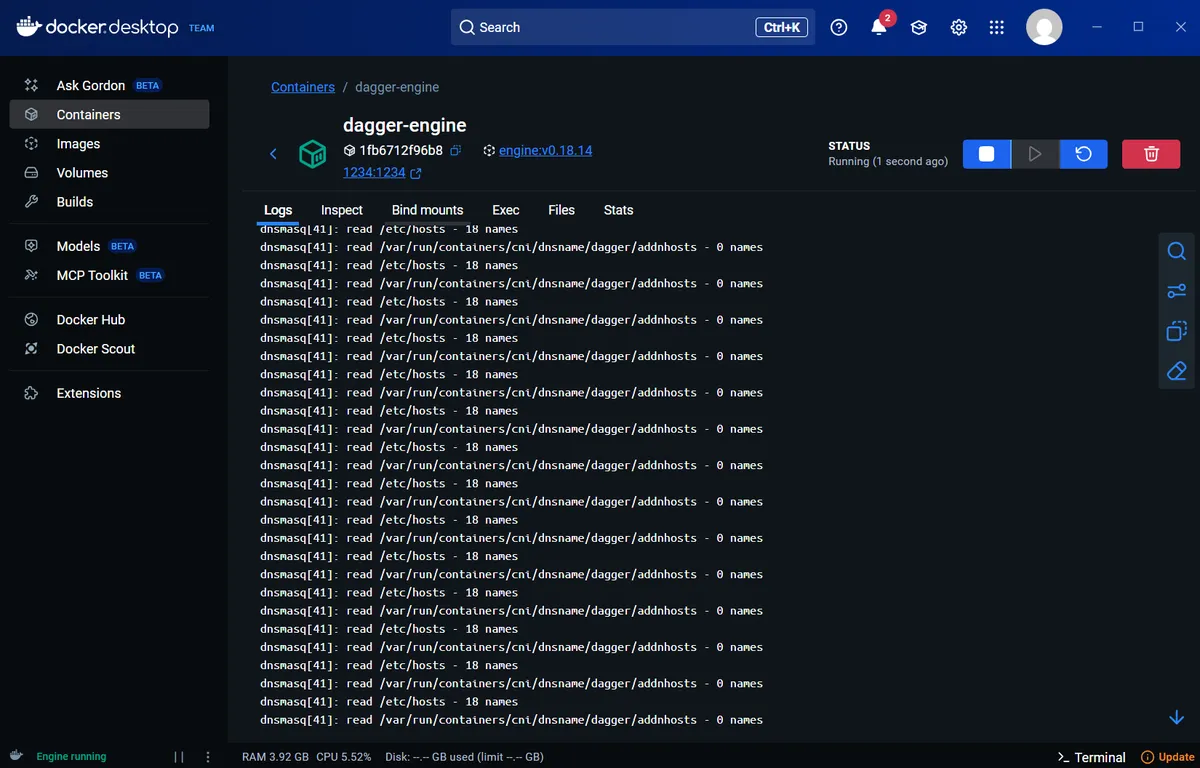

Dagger Engine Logs

Logs of the Dagger Engine Container can easily be monitored using Docker Desktop. After opening Docker Desktop, just select the container running the engine and inspect the logs.

The logs will be continuously displayed and should allow us to verify the setup going forward.

Instruction Prompt

As an academic example, we are going to ask the agent to bootstrap an OpenTofu Dagger module. Feel free to replace the example with anything else suiting your needs 😎.

This directory contains a newly created Dagger Module using the Python SDK. Long-term vision is to provide a wrapper for [Open-Tofu](https://opentofu.org) CLI commands.

For the first iteration, create a container environment using #container-use and prepare Dagger functions for the following CLI Commands that share CLI parameters (where appropriate). The generated CLI commands should only return the resulting opentofu commands as string output without actually running the commands.

Make sure the solution passes `pyright` type-checks and is correctly formatted and linted using `ruff`.

Finally, use Dagger within the container environment to print the module's documentation to validate your results.

Abort if something is wrong with the container environment and return the error.
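For orientation, the kind of function this prompt asks for could look roughly like the sketch below. It is not the agent's actual output. It is plain Python so that it runs without the Dagger SDK; in a real module, such functions would be methods on a class decorated with the Python SDK's `@object_type` and `@function`. The function name and the selection of parameters are my own assumptions, while `-chdir`, `-var-file`, and `-auto-approve` are existing OpenTofu CLI options.

```python
import shlex
from typing import Optional


def tofu_command(
    subcommand: str,
    chdir: Optional[str] = None,
    var_file: Optional[str] = None,
    auto_approve: bool = False,
) -> str:
    """Build an OpenTofu command line and return it as a string without running it.

    The caller is responsible for only passing flags that the chosen
    subcommand supports (e.g. -auto-approve for apply, not for plan).
    """
    args = ["tofu"]
    if chdir:
        # -chdir is a global option and has to precede the subcommand
        args.append(f"-chdir={chdir}")
    args.append(subcommand)
    if var_file:
        args.append(f"-var-file={var_file}")
    if auto_approve:
        args.append("-auto-approve")
    # shlex.join quotes arguments that contain spaces or shell metacharacters
    return shlex.join(args)
```

Sharing the parameters through one builder keeps the individual `plan`, `apply`, and similar functions thin, which matches the "share CLI parameters" requirement in the prompt.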

After invoking the prompt the agent will start working.

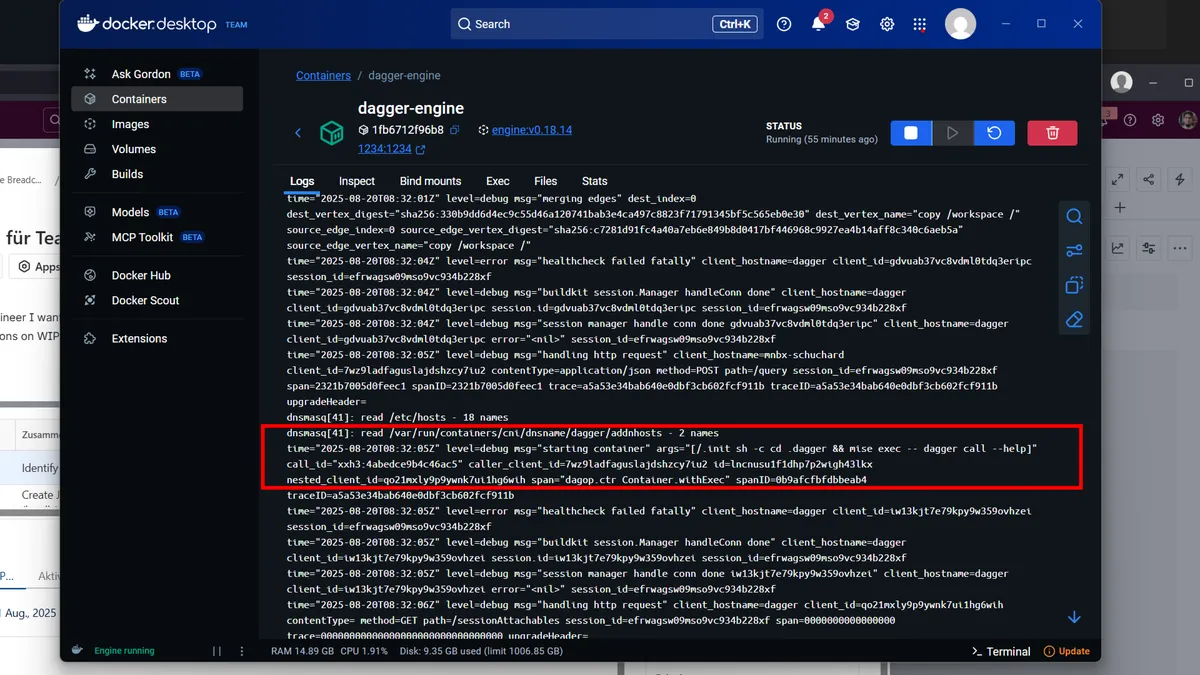

Verification

The Dagger Engine logs will eventually contain references from container-use as shown below.

Results

As shown, exposing the Dagger engine over unencrypted TCP allows developers to integrate additional container tools like container-use with Dagger without enabling Docker-in-Docker, which both conserves resources and makes troubleshooting much smoother.

Conclusion

As people in the industry have observed before me, AI coding assistance is still in its infancy and hype phase. This makes it hard for practitioners to keep up with all the news and releases. While I very much enjoy using OpenCode in conjunction with GitHub Copilot and Claude Sonnet 4 right now, I still don't know what awaits around the next corner.

So, enabling integration and evangelizing a mix-and-match culture in general reduces the risk of vendor lock-in and of getting left behind. Building bridges based on proven technology enables this.

Regardless of whether the use case is local development or Dagger in CI/CD environments, I keep struggling to find the perfect setup for the Dagger engine in professional settings (a beefy VM with SSH tunneling from local endpoints, Kubernetes, ...), but at least being able to scale it locally using the setup presented is a nice addition.

After rocking Container-Use for merely two months (decades in the world of LLMs), I keep finding new features (like secret integration), yet I also find shortcomings that I have no solution for yet (volume and directory mounts like .azure/ for authentication), so hold tight for additional posts on this topic.